An overview of articles

OpenBao versus Vault (Enterprise)

OpenBao (A fork of HashiCorp Vault before HashiCorp changed its license to be less-open-source) is developing quickly, so this article may be outdated in a few weeks. It’s now November 2025, please keep that in mind.

Feature comparison

There are differences between OpenBao and Vault (and Vault Enterprise). Let’s compare. I’ll explain details below this table.

| Feature |

OpenBao |

Vault |

Vault Enterprise |

| Namespaces |

✅ |

❌ |

✅ |

| High Availability |

✅ |

✅ |

✅ |

| Auto unsealing |

✅ |

✅ |

✅ |

| Replication |

❌ |

❌ |

✅ |

| Performance standby |

❌ |

❌ |

✅ |

| Auto Snapshot |

❌ |

❌ |

✅ |

| Sentinel |

❌ |

❌ |

✅ |

In general, you can clearly see that OpenBao is a fork of Vault (Community/Open Source) and lacks some “enterprise” features.

Namespaces

Namespaces can be used to offer teams their own piece of OpenBao or Vault. They are administratively separated.

Note: Vault Enterprise offers namespaces, but offering these namespaces to teams introduces a risk for Vault Enterprise only: The “client count” can grow uncontrollably. Since OpenBao does not have the concept of “client-count”, this issue is not applicable to OpenBao.

High Availability

When using (the default and recommended) storage backend “Raft”, high availability is available on OpenBao, Vault, and Vault Enterprise. In general, Raft works really well; the only consideration to keep in mind is that the latency between nodes should be 8ms or less. That’s typically easy to achieve in a single datacenter.

Auto unsealing

OpenBao/Vault will start up in a sealed state. That means the backend where data is stored is encrypted and requires to be unsealed to make the data readable. By default, the “Shamir” method is used to unseal, which is a manual process. Especially in containers, this proves to be difficult to manage; if a pod spins up, it’s sealed and requires manual intervention to be unsealed and available. For this reason, auto-unsealing can help. There are several methods:

| Method |

OpenBao |

Vault |

Vault Enterprise |

| Shamir |

✅ |

✅ |

✅ |

| Cloud key |

✅ |

✅ |

✅ |

| HSM |

✅ |

❌ |

✅ |

| Transit |

✅ |

✅ |

✅ |

| Static |

✅ |

❌ |

❌ |

Note: The “Static” unseal method is nice for development purposes, but not for production use; the unseal key is stored on the node that runs OpenBao, which makes it quite insecure. Great for development though!

Replication

Vault Enterprise supports “replication”. There are two possibilities to use replication:

- Disaster Recovery replication (DR): One Vault Enterprise cluster synchronizes data to another (standby/dr) Vault Enterprise cluster. The other (standby/dr) Vault Enterprise cluster receives data and can be promoted to become a primary in case of a disaster. This promotion is a manual action.

- Performance Replication (PR): One Vault Enterprise cluster synchronizes data to another Vault Enterprise cluster. Both clusters are available for requests. This is typically used to lower the amount of time it takes to request a secret to Vault Enterprise. Typically, PR clusters are spread over the globe.

OpenBao is missing this replication feature. There are some workarounds that can be used to restore service in a disaster:

- Save a snapshot frequently and store it off-site. In a disaster scenario, the remaining infrastructure can be used to spin up (or maybe it was already spun up) an OpenBao cluster and restore the snapshot. This method may sound a bit simplistic. I would, however, recommend considering this very-simple-to-maintain method.

- Use PostgreSQL as a storage backend for Vault. In this scenario, you move the responsibility to synchronize data to PostgreSQL. The PostgreSQL environment that supports synchronization has quite a few moving parts, making it a bit more difficult to implement. I would recommend this pattern if your company/team is capable of delivering such a PostgreSQL environment.

- Spread a cluster over datacenters, having one datacenter with “voters” and one datacenter with “non-voters”. In case the datacenter with the voters is lost, the data is replicated to the non-voter datacenter. With a couple of commands, this non-voter cluster can be changed to a voter-cluster again. There is a limitation of less than 8ms latency between the datacenters.

Note: There is a lot of work planned to support replication.

OpenBao/Vault using Raft have a “leader”-“follower” concept. With OpenBao and Vault (non-enterprise), followers will forward any request to the leader. With Vault Enterprise, a follower can be told to answer read-requests. This makes Vault Enterprise a bit more scalable. There is an issue to implement this on OpenBao.

Note: Writes will always be dealt with by the leader. So the amount of scalability will depend on your workload. Typically, there are more reads than writes.

Auto Snapshot

Making a “snapshot” (A file containing a backup) is an authenticated call. Which makes scripting this a bit annoying. Vault Enterprise has a concept of “auto-snapshot”, where Vault Enterprise is configured to drop a snapshot to a destination and clean up old snapshots.

This is quite a convenient feature, although scripting this is certainly possible. Keep in mind that auto-snapshot cleans up old snapshots according to its configuration. This clean-up has to be configured differently; for example, on S3 a lifecycle policy can be set up.

Note: This snapshot is only applicable to Raft.

Sentinel

Sentinel is a “pre-policy-engine”. It can check for certain conditions before even attempting to fetch (or write) a secret.

Regular policies are mostly sufficient to limit access, but there are edge-cases where Sentinel proves valuable.

I’ve implemented Sentinel a few times for customers and always struggled with the non-intuitive and very product-specific configuration.

OpenBao can use CEL for this. Here too, it’s quite specific and not particularly easy, but it exists.

All versions of Vault support “audit-devices”. These are destinations to store audit logs. Typically “file”, “syslog”, or “socket” are used. OpenBao has an extra type: “http”.

| Device type |

OpenBao |

Vault |

Vault Enterprise |

| file |

✅ |

✅ |

✅ |

| syslog |

✅ |

✅ |

✅ |

| socket |

✅ |

✅ |

✅ |

| http |

✅ |

❌ |

❌ |

Besides the extra “http” type, OpenBao has an extra method to configure the audit device: in the configuration file. This sounds trivial, but it’s quite important. It means all requests, from the very first request, are audited. With Vault, any request done before the audit device is configured is not logged.

| Product |

method(s) |

| OpenBao |

Configuration file AND API calls |

| Vault |

API calls |

OpenBao is sponsored by GitLab. GitLab would like to include a better secrets manager in their GitLab product. Since the HashiCorp Vault license does not allow redistributing Vault in another product, GitLab has invested in the OpenBao project. I just got (November 2025) information that OpenBao will likely be included in GitLab at the beginning of 2026.

Self init is a feature where OpenBao will configure secrets engines, authentication engines, and policies on first launch.

Now that sounds like a small feature, but just as the audit-device difference in OpenBao, it’s quite important. It means no root-token is required anymore; you configure OpenBao to connect to, for example, LDAP and allow certain people to administer OpenBao.

The issue with the root-token is:

- It’s “god-mode”, very powerful.

- It’s anonymous. The audit logs will never tell you WHO has used the root-token.

So with self-init, administrators can log in personally, making it much more traceable who did what. In combination with the audit devices in a configuration file, this closes the gaps that Vault potentially has with anonymous logins and with missed audit lines.

Migration paths

This is the simplest migration. You can (either):

- Replace the

vault binary for openbao. This scenario keeps the data as Vault wrote it and lets OpenBao read the data.

- Make a snapshot from Vault (Community/OSS) and restore that snapshot on OpenBao. This allows you to try the migration before touching production.

Vault Enterprise to OpenBao

This migration proves more difficult. The structure that Vault Enterprise (actually Raft) stores data is incompatible with both Vault (Community/OSS) and OpenBao.

Happily I work for Adfinis where we support many OpenBao/Vault/Vault Enterprise environments and have tooling available to migrate from Vault Enterprise to OpenBao.

Conclusion

OpenBao is pretty capable, in some areas more capable than Vault. I’m expecting OpenBoa to be developed further and cover missing functionality soon.

Openbao highly available (HA) clusters

This article focuses around the design of an Openbao (or Vault) Raft cluster. There is always some doubt when setting up an Openbao cluster using Raft. These are some designs, with a focus to the amount of datacenters that are available.

Note: Openboa is under development, these suggestions are relevant in September 2025. Features for replication are changing rapidly however.

On prem

On prem, maybe using VMWare or so, allows you to run Openbao on your own infrastructure.

Single datacenter

In case a single datacenter is available, your options are limited. This is the design I would recommend.

+--------------------- DC 1 ----------------------+

| +--- load balancer ---+ |

| | | |

| +---------------------+ |

| |

| +--- bao-1 ---+ +--- bao-2 ---+ +--- bao-3 ---+ |

| | | | | | | |

| +-------------+ +-------------+ +-------------+ |

+-------------------------------------------------+

In the ASCII-ART diagram above, you’ll find:

- A load balancer, this can be an F5 LTM, HAProxy, whatever. The load balancer is configured with a health-check to determine what node is the leader.

- 3 Openbao nodes, boa-[1-3]. Any (single) one of those nodes will be the leader, the rest follwers. Only leaders can serve traffic as of now.

Note: When possible, place the 3 bao nodes in different “availability zones”, where network, cooling and power are unrelated to another zone.

Double datacenter

There are some more options here, but still quite some limitations. This is what I would recommend:

+--- DNS ---+

+--------------------| |

| +-----------+

V

+--------------------- DC 1 ----------------------+ +--------------------- DC 2 ----------------------+

| +--- load balancer ---+ | | +--- load balancer ---+ |

| | | | | | | |

| +---------------------+ | | +---------------------+ |

| | | |

| +--- bao-1 ---+ +--- bao-2 ---+ +--- bao-3 ---+ | | +--- bao-1 ---+ +--- bao-2 ---+ +--- bao-3 ---+ |

| | | | | | | | | | OFF | | OFF | | OFF | |

| +-------------+ +-------------+ +-------------+ | | +-------------+ +-------------+ +-------------+ |

+-------------------------------------------------+ +-------------------------------------------------+

Here you see two datacenters, each with a unique Openboa cluster. They don’t synchronize data, as this feature is not available at this moment.

The intent is to let some DNS routing mechanism (F5 GTM or AWS Route 53 with health-checks for example) determine what cluster is available. The cluster on DC 1 is up and running, the cluster on DC 2 is installed, but not initialized. So the health check for the DC 2 cluster will not return an HTTP status of 200.

In case of an disaster, a stored snapshot should be restored to the DC 2 cluster. This is manual labour however.

Triple (or more) datacenter

In case you have 3 datacenters, you can create a cluster as following. Keep in mind the latency between the datacenters needs to be less than 8 ms.

+--- load balancer ---+

| |

+---------------------+

+----- DC 1 ------+ +----- DC 2 ------+ +----- DC 3 ------+

| +--- bao-1 ---+ | | +--- bao-2 ---+ | | +--- bao-3 ---+ |

| | | | | | | | | | | |

| +-------------+ | | +-------------+ | | +-------------+ |

+-----------------+ +-----------------+ +-----------------+

Note: The load balancer is not placed in a DC, it should not be impacted by a disaster. This can be a DNS record as well, or a VIP.

Note: DNS based routing techniques can be slower to populate, as DNS records have an “time to live”. The time to live can be short however.

Alternative

You can move the storage backend to (for example) PostgreSQL. This means quorum is no longer important for Openbao. (PostgreSQL is responsible for getting the data from on data center to the other.

Future

I’m sure Openbao will introduce new features to allow for replication and multi datacenter setups, which would dramatically change the above advices.

FAQ

How many nodes should be in a HA Openbao cluster?

| amount |

effect |

| 1 |

No redundancy, loss of a node is loss of data and service. |

| 2 |

No quorum when a node is lost. This setup is more fragile than 1 node. |

| 3 |

Yes, quorum, finally. You can loose 1 node. |

| 4 |

You can loose 1 node, just as the 3-node setup, no benefit. |

| 5 |

Nice, you have a majority and can loose 2 nodes. Better than 3 nodes. |

| more |

When it’s uneven: fine. |

Note: Nodes should be spread over availability zones. A hyper scaler typically has 3 availability zones.

A typical setup of Openbao has 1 or 3 nodes. I would recommend 3. Sometimes I see a 5 node cluster where there are 3 availability zones, which makes no sense; losing an availability zone has the same effect as a 3 node cluster.

Ansible Python version chart

Ansible (ansible-core) depends on Python. Actually, the version of ansible-core has a strict requirements on the version of Python required.

Here is a table demonstrating the versions of ansible-core and their Python requirement.

Overview

| anisble-core |

python |

| 2.11.* |

>= 2.7, < 3.0 |

| 2.12., 2.13. |

>= 3.8 |

| 2.14., 2.15. |

>= 3.9 |

| 2.16., 2.17. |

>= 3.10 |

As you can see, Ansible version 2.12 was the first version of ansible-core to require python version 3.

TOX

The tool “tox” can be used to test multiple versions of pip modules in an exising Python installation. This is useful locally, to test if a certain python version and ansible version pass tests, or in CI to do these tests automatically.

Here is an example of a tox.ini configuration file.

[tox]

# Make a list of environments to test. This is a combination of python version and ansible version.

# Be aware, TOX does not install Python, the host that TOX runs on must have the required Python version(s) installed.

# A missing python version will result in a `SKIP`.

envlist =

python2.7-ansible-2.11

python3.8-ansible-2.12, python3.8-ansible-2.13

python3.9-ansible-2.14, python3.9-ansible-2.15

python3.10-ansible-2.16, python3.10-ansible-2.17

# These setting apply for all environments, listed further down.

[testenv]

commands = molecule test

# Some environment variables that are passed to the test environment.

# These variables are used by molecule, which allows you to run:

# $ image=ubuntu tag=latest molecule test.

# The DOCKER_HOST is set because I run docker on a remote host.

passenv =

namespace

image

tag

DOCKER_HOST

# Set some environment variables for the test environment.

# The TOX_ENVNAME is used to make molecule display a hostname, including the environment name.

setenv =

TOX_ENVNAME={envname}

# The environments specified above, under the `envlist` section, should now define the Python version and the Ansible version.

[testenv:python2.7-ansible-2.11]

basepython = python2.7

deps =

# Pickup the pip requirements from the requirements.txt file.

-rrequirements.txt

# Set a specific version of ansible-core.

ansible-core == 2.11.*

[testenv:python3.8-ansible-2.12]

basepython = python3.8

deps =

-rrequirements.txt

ansible-core == 2.12.*

[testenv:python3.8-ansible-2.13]

basepython = python3.8

deps =

-rrequirements.txt

ansible-core == 2.13.*

[testenv:python3.9-ansible-2.14]

basepython = python3.9

deps =

-rrequirements.txt

ansible-core == 2.14.*

[testenv:python3.9-ansible-2.15]

basepython = python3.9

deps =

-rrequirements.txt

ansible-core == 2.15.*

[testenv:python3.10-ansible-2.16]

basepython = python3.10

deps =

ansible-core == 2.16.*

-rrequirements.txt

[testenv:python3.10-ansible-2.17]

basepython = python3.10

deps =

ansible-core == 2.17.*

-rrequirements.txt

The above configuration (tox.ini) works with a requirements.txt:

molecule

molecule-plugins[docker]

ansible-lint

Good luck testing!

Event Driven Ansible

Okay, so RedHat has released Event Driven Ansible. It’s a new way to execute playbooks, based on events.

These events originate from sources, a couple to highlight:

- Kafka: If a certain message is found on the bus, execute a playbook.

- Webhook: If a certain URL (on the Ansible Automation Platform Event Driven Ansible node) is called, execute a playbook.

This feels like a new way to remediate issues in an environment, for example: If a monitoring system detects a situation, call Ansible to remediate.

I’ve worked at companies that did “self healing” as a core-business, my conclusion so far.

If something is broken, there is a problem with the design. For example:

- If a disk is full, you may want to clean-up/compress data. The actual solution would be to add more storage.

- If a service stops unexpectedly, you may want to restart it. The actual problem is that the software needs to be fixed or the configuration needs to be improved.

Anyway, even with the above in mind, here is how Event Driven Ansible works.

+--- event source ---+ +--- event driven ansible ---+ +--- ansible controller ---+

| Kafka | > | Rulebook | > | Playbook |

+--------------------+ +----------------------------+ +--------------------------+

Now Ansible Automation Platform has the Ansible Controller, on the right in the diagram above. AAP has an API already, so it feels somewhat redundant to have Event Driven Ansible. But. A difference is that Event Driven Ansible can react to something.

Use-cases I can think of:

- A change starts, Event Driven Ansible can put a node in maintenance mode.

- An issue occurs, Event Driven Ansible collects information about a node. This means the engineer in charge can start troubleshooting with the latest information.

- A deployment of a new node is done, Event Driven Ansible can configure the node. (Drawback; it becomes quite unpredictable when the node is ready, as this happens asynchronously from the deployment.)

- A node is removed, Event Driven Ansible can remove the node from monitoring/backup/etc.

I’m looking forward to see where Event Driven Ansible will go to in the future, it’s at least a new way to interact with Ansible.

If you have ever installed Tower or Ansible Automation Platform, you may know that it’s pretty specific. Here are my suggested improvements to the installer.

1. Output is cluttered

The output of the installer does not use loop_control.label causing the output to look something like this:

TASK [ansible.automation_platform_installer.repo_management : Install the Automation Platform bundle repository] ***

changed: [myaap-1.example.com] => {"changed": true, "checksum": "180eafb0ddf80e87f464c359358238a8aed1374b", "dest": "/etc/yum.repos.d/ansible-automation-platform.repo", "gid": 0, "group": "root", "md5sum": "36032c7104c2c8bfa11093eae71f54cb", "mode": "0644", "owner": "root", "secontext": "system_u:object_r:system_conf_t:s0", "size": 268, "src": "/root/.ansible/tmp/ansible-tmp-1718028961.09427-63855-258049482086701/source", "state": "file", "uid": 0}

changed: [myaap-0.example.com] => {"changed": true, "checksum": "180eafb0ddf80e87f464c359358238a8aed1374b", "dest": "/etc/yum.repos.d/ansible-automation-platform.repo", "gid": 0, "group": "root", "md5sum": "36032c7104c2c8bfa11093eae71f54cb", "mode": "0644", "owner": "root", "secontext": "system_u:object_r:system_conf_t:s0", "size": 268, "src": "/root/.ansible/tmp/ansible-tmp-1718028961.074965-63853-127923363074838/source", "state": "file", "uid": 0}

changed: [myaap-database.example.com] => {"changed": true, "checksum": "180eafb0ddf80e87f464c359358238a8aed1374b", "dest": "/etc/yum.repos.d/ansible-automation-platform.repo", "gid": 0, "group": "root", "md5sum": "36032c7104c2c8bfa11093eae71f54cb", "mode": "0644", "owner": "root", "secontext": "system_u:object_r:system_conf_t:s0", "size": 268, "src": "/root/.ansible/tmp/ansible-tmp-1718028961.0822551-63852-33509642687031/source", "state": "file", "uid": 0}

changed: [myaap-2.example.com] => {"changed": true, "checksum": "180eafb0ddf80e87f464c359358238a8aed1374b", "dest": "/etc/yum.repos.d/ansible-automation-platform.repo", "gid": 0, "group": "root", "md5sum": "36032c7104c2c8bfa11093eae71f54cb", "mode": "0644", "owner": "root", "secontext": "system_u:object_r:system_conf_t:s0", "size": 268, "src": "/root/.ansible/tmp/ansible-tmp-1718028961.079015-63857-51597012965829/source", "state": "file", "uid": 0}

As a consumer of this installer, I do not need all the clutter.

2. Some tasks are allowed to fail

TASK [ansible.automation_platform_installer.config_dynamic : Check if node is registered with RHSM] ***

fatal: [myaap-0.example.com]: FAILED! => {"changed": true, "cmd": ["subscription-manager", "identity"], "delta": "0:00:01.056821", "end": "2024-06-10 14:12:26.504043", "msg": "non-zero return code", "rc": 1, "start": "2024-06-10 14:12:25.447222", "stderr": "This system is not yet registered. Try 'subscription-manager register --help' for more information.", "stderr_lines": ["This system is not yet registered. Try 'subscription-manager register --help' for more information."], "stdout": "", "stdout_lines": []}

...ignoring

This one can be solved by setting failed_when to false and using a when condition in a follow-up task.

Or this one:

TASK [ansible.automation_platform_installer.preflight : Preflight check - Read in controller version] ***

fatal: [myaap-1.example.com]: FAILED! => {"changed": false, "msg": "file not found: /var/lib/awx/.tower_version"}

...ignoring

This task should be preceded with a ansible.builtin.stat task and only attempt to run the described task when the file exists.

3. Command used too often

Some tasks use the ansible.builtin.command module, where a “real” Ansible module is available. For example:

TASK [ansible.automation_platform_installer.redis : Enable Redis module] *******

changed: [myaap-2.example.com] => {"changed": true, "cmd": ["dnf", "module", "-y", "enable", "redis:6"], "delta": "0:00:04.711806", "end": "2024-06-10 14:18:47.991515", "msg": "", "rc": 0, "start": "2024-06-10 14:18:43.279709", "stderr": "", "stderr_lines": [], "stdout": "Updating Subscription Management repositories.\nUnable to read consumer identity\n\nThis system is not registered with an entitlement server. You can use subscription-manager to register.\n\nLast metadata expiration check: 0:00:06 ago on Mon 10 Jun 2024 02:18:37 PM UTC.\nDependencies resolved.\n================================================================================\n Package Architecture Version Repository Size\n================================================================================\nEnabling module streams:\n redis 6 \n\nTransaction Summary\n===================================================

Here the module community.general.dnf_config_manager could have been used, making the task idempotent.

There are likely some tasks that require ansible.builtin.command in which case changed_when can be used to assert if a change was done or not.

4. Inconsistent names are uses

Some tasks end with a period, some do not. Here is an example:

TASK [ansible.automation_platform_installer.repo_management : Remove legacy ansible-tower repository] ***

TASK [ansible.automation_platform_installer.repo_management : Install the Automation Platform yum repository.] ***

5. Invalid (inventory) configuration are possible.

The Ansible Automation Platform setup used an inventory to explain what the installer should do. There are many invalid configuration possible. This means the installer will start to configure the instances, but fail with an “abstract” error at some moments.

Conclusion

The installer of Ansible Automation Platform does work, there are many interesting patterns used, but there is also room for improvement. As this installer feels a bit like a show-case for Ansible, I do not like the fragility of this installer.

Molecule debugging

Using Molecule really helps to develop Ansible roles. (and collections.) But, when things don’t go as planned, it can be a little more challenging to understand what’s wrong.

When Molecule runs, you see the Ansible output, something like this:

TASK [ansible-role-auditd : Start and enable auditd] ***************************

fatal: [auditd-fedora-rawhide]: FAILED! => {"changed": false, "msg": "A dependency job for auditd.service failed. See 'journalctl -xe' for details.\n"}

Now what

There are a couple of options:

- You can login to the instance Molecule created. You need to let the instance exist by running

molecule test --destroy=never. Next you can run molecule login to jump into the instance Molecule was running against. You can now troubleshoot the instance.

- You can put Ansible in debug mode:

ANSIBLE_DEBUG=True molecule test.

- You can increase verbosity:

ANSIBLE_VERBOSITY=5 molecule test.

- You can put Ansible in diff mode:

DIFF_ALWAYS=True molecule test.

- You can leave Ansible created scripts:

ANSIBLE_KEEP_REMOTE_FILES=True molecule test. This allows you to inspect the scripts Ansible placed on the target.

In my case ANSIBLE_VERBOSIY is set to 5: ANSIBLE_VERBOSITY=5 molecule test:

TASK [ansible-role-auditd : Start and enable auditd] ***************************

task path: /Users/robertdb/Documents/github.com/robertdebock/ansible-role-auditd/tasks/main.yml:44

Running ansible.legacy.service

...

fatal: [auditd-fedora-rawhide]: FAILED! => {

"changed": false,

"invocation": {

"module_args": {

"arguments": "",

"enabled": true,

"name": "auditd",

"pattern": null,

"runlevel": "default",

"sleep": null,

"state": "started"

}

},

"msg": "A dependency job for auditd.service failed. See 'journalctl -xe' for details.\n"

}

PLAY RECAP *********************************************************************

auditd-fedora-rawhide : ok=42 changed=0 unreachable=0 failed=1 skipped=3 rescued=0 ignored=0

(Still no clue what’s wrong by the way, but at least I see more of what Ansible is trying to do.)

Hope this helps you get unblocked with your Ansible/Molecule issue!

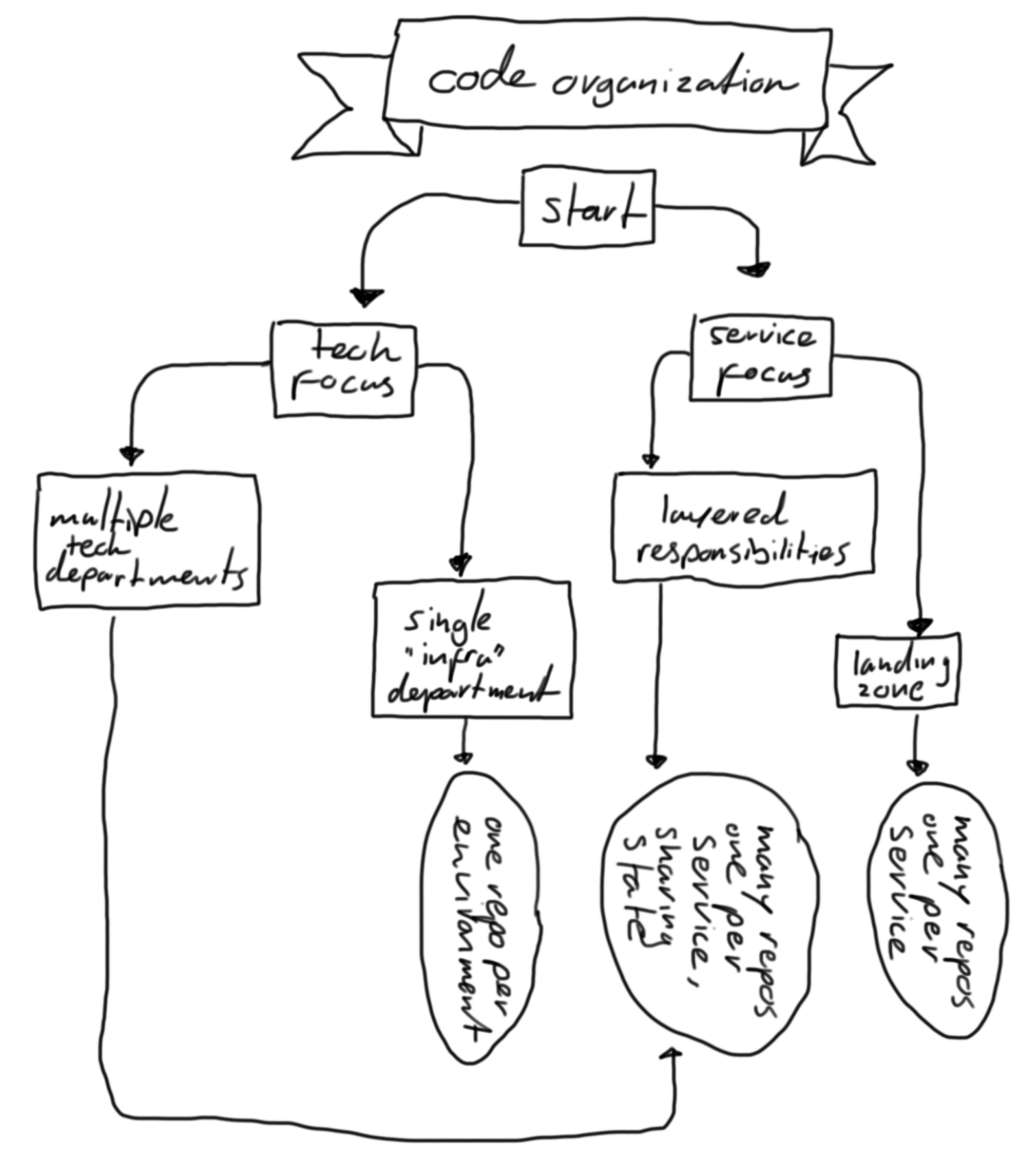

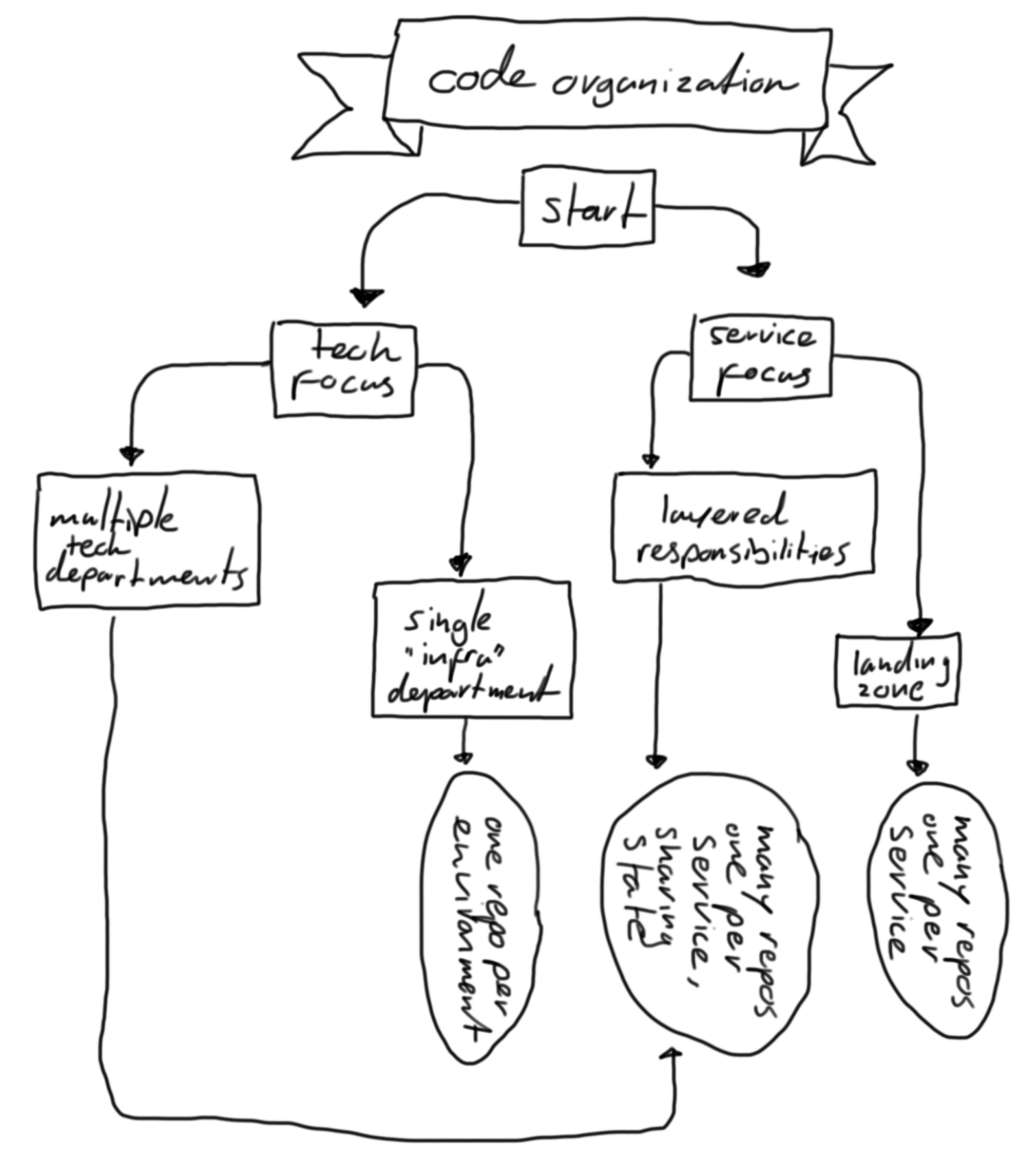

Code Organization

Context: I work in projects for customers. Mostly involving Ansible and Terraform. These customer range in size from about 100 employees to 100.000 employees.

Code organization?

After learning and adopting a technology, like Terraform on Ansible, a question that will come up is: “How do we structure all that code in a maintainable way?”. With this article I hope to explain what options there are and which option usually works.

NOTE: This is not exact science. This is my opinion and experience. I’m sure there are other ways to do this, with good reasons.

The organization types

I mostly see these types of organizations:

- “TECH ORIENTED” - There are departments like

network, linux, windows, databases and so on. Departments depend on each other, a database needs to be installed on for example linux, which needs to be on a network.

- “SERVICE ORIENTED” - There are departments based on business value, for example

mobile-app, morgages, parts-warehouse, it-support. These departments can be completely independent from each other, having their own release cycles and an outage to one service, does not affect the other services.

- “ENVIRONMENT ORIENTED” - Much less common, but there are

development, testing and production departments. Knowledge of all services is requied at eacht department. An “environment” can be broken, not influencing the other environments. (These departments can also be per datacenter, cloud or country for example.)

Decide

I’ve tried to draw (Yes, artschool does pay off.) a decision chart to help you decide which organization type to choose.

[ ]

]

The outcome contains a few of terms that need more explanation:

- “ONE REPO PER ENVIRONMENT” - Here, a single department maintains all code to provision and configure infrastructure. Typical for a smaller company with a couple of “system administrators”. In this situation, the blast-radius of a mistake can be huge.

- “MANY REPOS, ONE PER SERVICE, SHARING STATE” - This means that each service has it’s own repository, but they need something from each other. For example, the

network is shared, so as a department, you should connect to the currect subnets.

- “MANY REPOS, ONE PER SERVICE” - This means that each service has it’s own repository. This is a good way to go if you have a lot of services and they are not dependent on each other. This way you can have a release cycle per service.

My opinion

Hope this information helps a bit in deciding how to setup your repositories.

How I setup sandboxes

Context: I work in projects for customers. Mostly involving Ansible, Terraform, GitLab, Vault and Terraform Enterprise.

So here is a method I use to manage my work. It helped me over and over again in the last few years. This method does cost some time at the beginning of a project, but has saved me many hours later in the project.

At the start of a project I’ll keep my eyes and ears open to understand the environment I will be working in. These details include:

- What infrastructure (VMWare, AWS, GCP, Azure, etc.)

- What services relate to the thing I’m helping with. (LDAP, GitLab, OpenShift, etc)

- How is the network segregated.

- What issues are there at the moment.

I’ll mostly know what the deliverable is up-front. Sometimes it’s difficult to visualize what is expected anyway, that’s just something I’m not very good at. A deliverable can be something like:

- Upgrade the application.

- Scale up the application.

- Do a performance tests.

- Make a health-check report.

Note, that’s just an example, but it’s something along those lines.

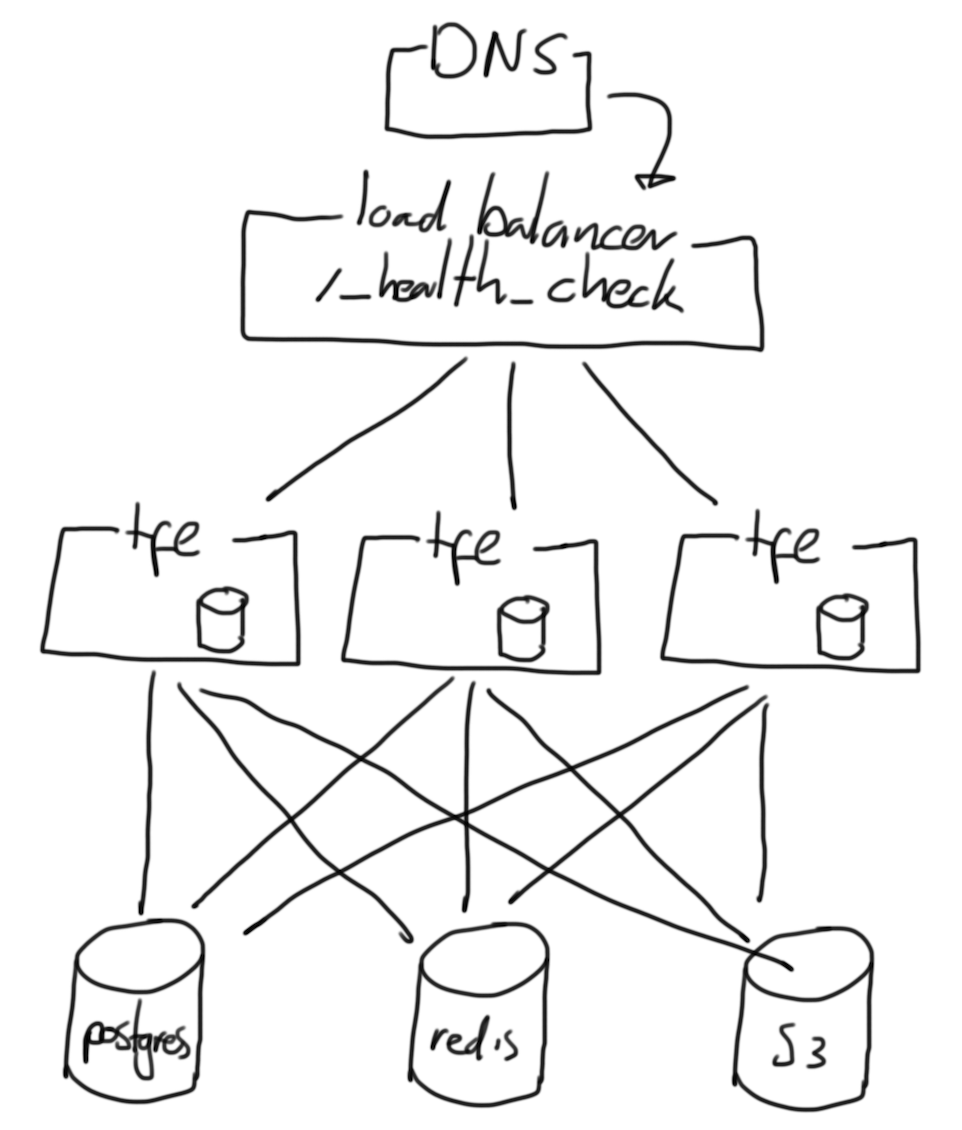

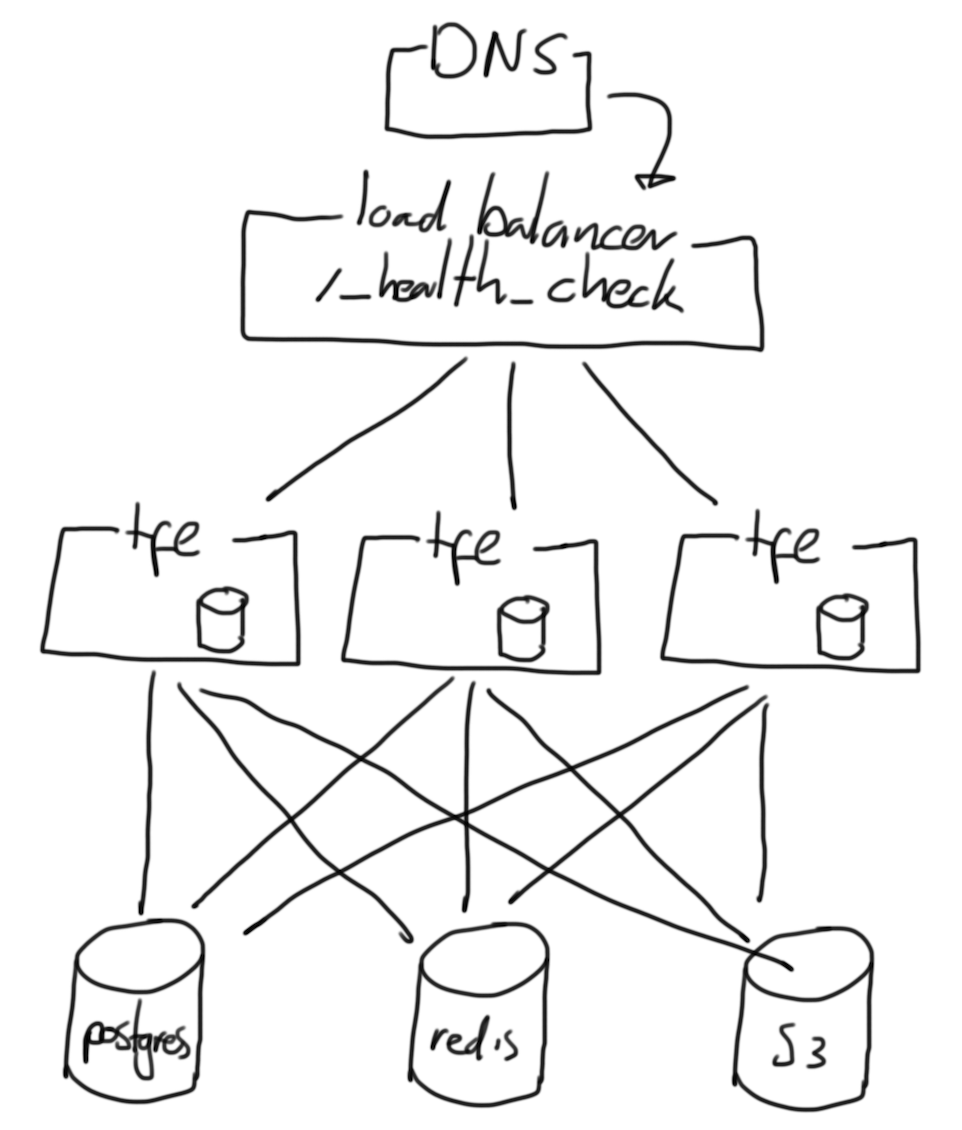

Anyway, once I know what to do and know where to do it, I’ll start drawing. Images like this, quite abstract:

This imaging gives me a clue how to start coding. Coding I’ll mostly use Terraform to deploy infrastructure. It nearly always ends up with some instances, a load-balancer and everything required to support the application.

When using instances, It’s quite annoying that the IP/hostname keeps changing every deployment. So I’ll let Terraform write an ssh-configuration file.

First; I’ll include a conf.d directory from ~/.ssh/config:

(And just to be explicit, this is done manually, once, not by Terraform.)

Next I’ll let Terraform write an ssh configuration using a template (templates/ssh_config.tpl):

%{ for index, instance in instances ~}

Host instance-${ index }

HostName ${ instance }

User ec2-user

IdentityFile ${ ssh_key_path }

%{ endfor }

In the above example; a loop is created using instances. The index is used to count in what loop we’re in.

Next, I’ll let Terraform place the ~/.ssh/conf.d/SOME_PROJECT.conf file:

# Place the SSH configuration.

resource "local_file" "ssh_config" {

content = templatefile("${path.module}/templates/ssh_config.tpl", {

ssh_key_path = local_sensitive_file.default.filename

instances = aws_instance.default[*].public_ip

})

filename = pathexpand("~/.ssh/config")

}

You see that both ssh_key_path and instances are sent to the template. And what’s missing here is all the code to make the instances and sensitive files. You’ll find many other articles describing that process.

Now if you terraform apply, Terraform will create instances and drop a .ssh config file, so you can simply type ssh instance-0. Also good for Ansible, because Ansible uses the .ssh configuration as well. So the inventory can simply contain the hostname instance-0. (And Terraform can create that inventory as well!)

What really helps here too, is to write modules/roles/libraries that can be re-used. This is an extremely time-consuming process, not always possible in a budget, but is a quick win for future projects. Such a next project would simply be an Ansible playbook for example that lists a couple of Ansible roles and variables. In some cases it’s difficult to justify the time-investment, but I always try.

Now comes the ethical part; What do customers actually pay for? My time? A deliverable? The truth is probaly somewhere in the middle. I work for Adfinis, so using the above pattern has a couple of advantages for both me/us and the customer or project:

- We’re reusing code that’s in use elsewhere. It probalby works, I don’t need to worry too much about the technicalities.

- It much quicker to build a production-like sandbox.

- It’s easier to collaborate; a colleague can also spin up a sandbox.

Anyway, hope this is useful to you, hope you get the benefits of this method, just as I do!

Ansible lookup or conditionals

There are multiple ways to how Ansible deals with differences in distributions. Take these 2 examples:

Here is an example that uses when to differentiate for different situations:

- name: Install packages

ansible.builtin.package:

- name: python3-docker

when:

- ansible_os_family == "Debian"

The example above installs python3-docker if the managed node is “Debian”. (ansible_os_family includes Debian, Ubuntu and others.

Another method is to make a map of distributions and define the required packages:

_my_packages:

Debian:

- python3-docker

my_packages: "{{ _my_packages[ansible_os_family] | default([]) }}"

Above you see the map _my_packages. It’s basically a variable that is a dictionary or map. In this case the intent is to map different operating systems to a list of packages.

The my_packages variable looks up a value from the _my_packages map. The results in:

python3-docker when the ansible_os_family is Debian.[] (an empty list) for any other ansible_os_family.

And just to complete the example: I prefer this method:

# vars/main.yml

_my_packages:

default: []

Debian:

- python3-docker

my_packages: "{{ _my_packages[ansible_os_family] | default('default') }}"

# tasks/main.yml

- name: Install packages

ansible.builtin.package:

- name: "{{ my_packages }}"

As a maintainer of the above code, you can focus on the _my_packages map/dict. In my experience, this is simpler; Think of the logic once (tasks/main.yml or playbook.yml) and focus on “data” later. (vars/main.yml or anywhere else you’d put variables.)

By the way, I’m not sure how to call this mechanism, I guess “lookup” is pretty close.

Conclusion

You can use either of the above examples; I prefer the lookup method, but I see others using the conditional method more often.

The best argument for me is ease of maintentance or readability.

Ansible Cheat Sheet

Besides the many modules, there are actually not that many tricks to Ansible. I’m assuming you know a bit about Ansible and would like to explore the edges what can be done with Ansible.

Conditions

Conditionals determine when a task should run. You can check to see if something is in the state it should be before executing the task.

- name: Do something

ansible.builtin.debug:

msg: "I will run if some_variable is defined."

when:

- some_variable is defined

The task Do something will on run if some_variable is set. You can test many things.

Conditions can be applied to:

- Tasks

- Blocks

- Playlists

- Roles

Good to know: a when: statement accepts strings (when: "" == "hello') or can accept lists:

- name: Do something

ansible.builtin.debug:

msg: "I will run if some_variable is defined."

when:

- some_variable is defined

- somm_variable == "hello"

Determining what makes a task changed or failed.

Sometimes Ansible needs some help determining what the result of a task is. For example the ansible.builtin.command module returns changed, because Ansible assumes you’ve modified the node. In these cases, you will need to tell Ansible when it’s changed. The same can be used to fail a task.

- name: Do something that does not change the system

ansible.builtin.command:

cmd: ls

changed_when: no

- name: Do something and help Ansible determine when the task is changed

ansible.builtin.command:

cmd: some_command_that_can_change_things

register: my_command

changed_when:

- '"modified" in my_command.stdout'

failed_when:

- '"error" in my_command.stdout'

The first example never changes, in this case, that’s true; ls is a read-only command.

The second example reports changed when modified can be found in the output. As you can see, you do need to use register to save the output of the command.

Ansible reports failed when error is found in the output.

Note: Ansible will fail by default if the cmd returns a non-0 exit code.

command, shell or raw.

Notify and handlers

Handlers are used to run after some task changed. It’s useful to “gather” handlers and run them at the end. A classic example:

tasks:

- name: Place a configuration.

ansible.builtin.template:

src: some_config.conf.j2

dest: /etc/some_config.conf

notify:

- Restart some_service

handlers:

- name: Restart some_service

ansible.builtin.service:

name: some_service

state: restarted

In the above snippet, the task places a configuration and informs the handler Restart some_service. This restart will occur at the end of a play.

There are some tricks:

It’s a list!

The notify argument takes a list:

- name: Do something

ansible.builtin.command:

cmd: ls

notify:

- First handler

- Second handler

I like to spread things vertically, it improves readability.

Ordering

The order of the handlers is determined the order of the tasks as written in the handlers. Take this example:

handlers:

- name: First

# Something

- name: Second

# Something

- name: Third

# Something

Using the task in the handlers above, the order will always be:

FirstSecondThird

(And a handler can be skipped by not notifying it.)

Chaining

You can chain handlers. Sometimes this helps to get a role idempotent.

tasks:

- name: Do the first thing

ansible.builtin.command:

cmd: ls

notify:

- Do the second thing

handlers:

- name: Do the second thing

ansible.builtin.command:

cmd: ls

notify:

- Do the third thing

- name: Do the third thing

ansible.builtin.command:

cmd: ls

You can create an not-so-infinite loop, by letting handler_one call handler_two and let handler_two call handler_one. This loops up to 3 times, not sure what prevents Ansible from an infinite loop, but thanks Ansible developers!

Conditions

You can add a condition to a handler, just like any other task.

- name: I am a handler

ansible.builtin.command:

cmd: ls

when:

- some_variable is defined

Running handlers now

If required, you can tell Ansible to run the handlers right now. You’d typically wait until the end of the play, but there are certainly situations where the handlers need to run right now.

tasks:

- name: Do something

ansible.command.command:

cmd: ls

notify:

- Some handler

- name: Flush the handlers

ansible.builtin.meta: flush_handlers

- name: Do other things

ansible.command.command:

cmd: ls

handlers:

- name: Some handler

ansible.command.command:

cmd: ls

The order of the above tasks will be:

- Do something

- Flush the handlers

- Some handler

- Do other things

Delegating tasks

There are not many use-cases for delegate_to, but some will apply. Consider this snippet:

- name: Do something to the API

ansible.builtin.uri:

url: "https://some.host.name/api/v1/user

method: GET

delegate_to: localhost

The example above gets information from an API. There is no specific need to run this API call on the targeted node. In such cases, you can use delegate_to.

The examples I can think of:

- Using the

ansible.builtin.uri module.

- Copying files between nodes using the

ansible.posix.synchronize module.

Running a job just once

In some situations you want to do something to just one node from all targeted nodes.

tasks:

- name: Do something

ansible.builtin.command:

cmd: ls

run_once: yes

These situations may call for run_once, there are probably many more:

- Selecting a host that will become a cluster leader/master/primary.

- Checking if a variable is set correctly.

- Using

delegate_to to do something once locally.

- Managing a cluster that’s also created in the same role.

When writing Ansible roles, checking user input ensures your role can work. Imagine you are asking for a list, but a user specifies a boolean.

# This is a list.

my_variable_list:

- one

- two

- three

# This is a string, not a list.

my_variable_list: hello

In the example above, the same varialbe is set twice (last one wins). The bottom one seems incorrect, as it’s not a list.

You can use at least two methods to verify if the input is correct; argument_specs or assert.

argument_specs

The documentation contains all details, but let’s take one example:

---

argument_specs:

main:

short_description: Install and configure Elasticsearch on your system.

description: Install and configure Elasticsearch on your system.

author: Robert de Bock

options:

elasticsearch_type:

type: "str"

default: oss

description: "The license to use, `elastic` uses the \"Elastic\" license, `oss` uses the \"Apache 2.0\" license."

choices:

- elastic

- oss

elasticsearch_network_host:

type: "str"

default: "0.0.0.0"

description: The IP address to bind on.

elasticsearch_network_port:

type: "int"

default: 9200

description: The port to bind on.

elasticsearch_discovery_seed_hosts:

type: "list"

default: []

description: Provides a list of the addresses of the master-eligible nodes in the cluster.

elasticsearch_cluster_initial_master_nodes:

type: "list"

default: []

description: Sets the initial set of master-eligible nodes in a brand-new cluster.

Benefits of using arguments_specs include:

- Fast

- Built in (since +- 2020)

- Can be used to generate docs.

A limitation is that you can only test a single variable, no combinations and the tests on that single variable are limited.

assert

You can also ansible.builtin.assert variables.

---

- name: Include assert.yml

ansible.builtin.import_tasks: assert.yml

run_once: yes

delegate_to: localhost

---

- name: Test if elasticsearch_type is set correctly

ansible.builtin.assert:

that:

- elasticsearch_type is defined

- elasticsearch_type is string

- elasticsearch_type in [ "elastic", "oss" ]

quiet: yes

- name: Test if elasticsearch_network_host is set correctly

ansible.builtin.assert:

that:

- elasticsearch_network_host is defined

- elasticsearch_network_host is string

quiet: yes

- name: Test if elasticsearch_http_port is set correctly

ansible.builtin.assert:

that:

- elasticsearch_http_port is defined

- elasticsearch_http_port is number

- elasticsearch_http_port >=1

- elasticsearch_http_port <= 65536

quiet: yes

- name: Test if elasticsearch_discovery_seed_hosts is set correctly

ansible.builtin.assert:

that:

- elasticsearch_discovery_seed_hosts is defined

- elasticsearch_discovery_seed_hosts is iterable

quiet: yes

- name: Test if elasticsearch_cluster_initial_master_nodes is set correctly

ansible.builtin.assert:

that:

- elasticsearch_cluster_initial_master_nodes is defined

- elasticsearch_cluster_initial_master_nodes is iterable

quiet: yes

This does something similar as the argument_specs described earlier.

Benefits of assert include:

- You can test more things of a single variable.

- You can set an error message.

- You can combine tests on multiple variables.

Determine the amount of nodes to run on simultanious.

Sometimes you want to limit the number of nodes being addressed at one time. One-host-at-a-time for example:

- name: Do something machine-by-machine

hosts: web_servers

serial: 1

...

In the example above, only one node will be targeted at a time. This can be useful for updates to hosts behind a load balancer for example.

Host by host or as fast as possible

The normal strategy of Ansible is linear: Execute one task on targeted nodes, after that, continue with the next task:

TASK [Do step one] ***

changed: [node_1]

changed: [node_2]

changed: [node_3]

TASK [Do step two] ***

changed: [node_1]

changed: [node_2]

changed: [node_3]

You can also instruct Ansible to “go as fast as possible” by setting the strategy to free. This runs each task on targeted nodes, but does not wait until all hosts have completed a task. This also means there are limitations; when ordering of the tasks is important, this strategy can’t be used.

- name: Do something as fast as possible

hosts: web_servers

strategy: free

tasks:

- name: Update all software

ansible.builtin.package:

name: "*"

state: latest

notify:

- Reboot

handlers:

- name: Reboot

ansible.builtin.reboot:

The above sequence runs the jobs (Update all software and Reboot) as fast as possible; one node may be updating, the other can be rebooting, or even already be finished.

Grouping tasks

In a task-list it can be useful to group tasks. Likely because they share something. (A notify or when for example.) You can even group tasks because you like it, or if it increases readability.

- name: Do something to Debian

when:

- ansible_distribution == "Debian"

notify:

- Some Debian handler

block:

- name: Taks 1

...

- name: Task 2

The block (Do something to Debian) shares a notify and when for both tasks.

- When the condition (

when) is met, two tasks will run.

- When any of the tasks is

changed, the handler Some Debian handler will be called.

Catching failures

The block statement can also be used to “catch” a possible failure.

- name: Do something

hosts: web_servers

become: no

gather_facts: no

tasks:

- name: Start the webserver

block:

- name: Start httpd

ansible.builtin.service:

name: httpd

state: started

rescue:

- name: Install httpd

ansible.builtin.package:

name: httpd

always:

- name: Show a message

ansible.builtin.debug:

msg: "Hello!"

Although the example above makes no sense, it does demonstrate how the rescue statement works. “If starting httpd fails, install httpd.” (What’s incorrect here is that there can be many more reasons for httpd to fail, plus if it fails, httpd would be installed, but never started. Weird.

The alwasy will run anyway, no matter what the first (Start httpd) task does.

I’m not a big fan of block, rescue & always, it increases complexity, can possible show red output (errors), which can throw of people. I would rather use some tasks sequence that first checks things, and based on the results, execute some action.

Variables

Wow, this is going to be a large chapter. Variables can be set and used in many ways in Ansible. Let’s cover a few edge-cases.

Gathering facts

facts are details of a node, for example ansible_distribution. These can be used anywhere to make desicions. A common way to use this:

- name: Do something on Debian systems

ansible.builtin.debug:

msg: "I am running ."

when:

- ansible_distribution == "Debian"

You can also map values to a distribution in vars/main.yml:

_package_to_install:

Debian: x

RedHat:

- y

- z

package_to_install: ""

The package_to_install variable can now be used in a regular task, for example: tasks/main.yml

- name: install package

ansible.builtin.package:

name: ""

You can also introduce a default value:

_package_to_install:

default: x

Debian: y

RedHat: z

package_to_install: ""

This way of working allows the tasks/main.yml to be readable.

Use a variable from another host

Image you have a couple of hosts and you’ve set_fact or register-ed something on node-1 that you need on node-2.

- name: Set a variable on node-1

hosts: node-1

tasks:

ansible.builtin.set_fact:

some_variable: some_value

- name: Get a variable from node-1 on node-2

hosts: node-2

tasks:

ansible.builtin.debug:

msg: "The value of some_variable on node-1 is: hostvars['node-1']['some_variable']

Complexity

Sometimes you need a bit of complexity to finish a role or playbook. I would suggest to use this list, starting with “keep it simple”, going down to “hide your complexity here”.

default/main.yml (Or any other user-overwritable variable) - Keep this very simple. Users will interface with your code here.tasks/*.yml and handlers/*.yml - This can be a little more complex. Users are not expected to read this, some will peek here.vars/main.yml - This is where complexity lives. Keep all your data, filters and lookups here when possible.

Lint

Ansible lint is always right.

Well, that being said, you can overrule ansible-lint by setting the ` # noqa` argument. (Mind you: space-space-hash-space-identifier)

- name: Update all software (yum)

ansible.builtin.yum:

name: "*"

state: latest # noqa package-latest This role is to update packages.

Try to understand what Ansible lint is trying to tell you, likely you need to restructure your code until Ansible lint is happy.

General

- Optimize for readability.

- Spread things vertically when possible.

Ansible versions

Ansible has had a few changes in the used version. This table illustrates the versions and the relation to collections.

Sources:

- https://access.redhat.com/support/policy/updates/ansible-engine

- https://www.ansible.com/blog/getting-started-with-ansible-collections

- https://docs.ansible.com/ansible/latest/user_guide/collections_using.html

- https://docs.ansible.com/ansible/latest/reference_appendices/release_and_maintenance.html

- https://www.ansible.com/blog/ansible-3.0.0-qa

- https://docs.ansible.com/ansible/latest/roadmap/COLLECTIONS_2_10.html

- https://github.com/ansible-community/ansible-build-data

There are a couple of ways to loop in Terraform. Let’s have a look.

Count

This is the “oldest” method. It’s quite powerful.

resource "some_resource" "some_name" {

count = 3

name = "my_resource_${count.index}"

}

As you can see, the resource some_resource is being created 3 (count = 3) times.

The name should be unique, so the count.index variable is used. This variable is available when using count.

The variable count.index has these values:

| itteration |

count.index value |

| first |

0 |

| second |

1 |

| third |

2 |

And so on.

Counting and looking up values

The parameter count sounds simple, but is actually quite powerful. Lets have a look at this example.

Here is a sample .tfvars file:

virtual_machines = [

{

name = "first"

size = 16

},

{

name = "second"

size = 32

}

]

The above structure is a “list of maps”:

- List is indicated by the

[ and ] character.

- Map is indicated by the

{ and } character.

Now lets loop over that list:

resource "fake_virtual_machine" "default" {

count = length(var.virtual_machines)

name = var.virtual_machines[count.index].name

size = var.virtual_machines[count.index].size

}

A couple of tricks happen here:

count is calculated by the length function. It basically counts how many maps there are in the list virtual_machines.name is looked-up in the variable var.virtual_machines. Pick the first (0) entry from var.virtual_machines in the first itteration.- Similarly

size is looked up.

This results in two resources:

resource "fake_virtual_machine" "default" {

name = "first"

size = 16

}

# NOTE: This code does not work; `default` may not be repeated. It's just to explain what happens.

resource "fake_virtual_machine" "default" {

name = "second"

size = 32

}

For each

The looping mechanism for_each can also be used. Similar to the count example, let’s think of a data structure to make virtual machines:

virtual_machines = [

{

name = "first"

size = 16

},

{

name = "second"

size = 32

}

]

And let’s use for_each to loop over this structure.

resource "fake_virtual_machine" "default" {

for_each = var.virtual_machines

name = each.value.name

size = each.value.size

}

This pattern creates exactly the same resources as the count example.

Dynamic block

Imagine there is some block in the fake_virtual_machine resource, like this:

resource "fake_virtual_machine" "default" {

name = "example"

disk {

name = "os"

size = 32

}

disk {

name = "data"

size = 128

}

}

The variable structure we’ll use looks like this:

virtual_machines = [

{

name = "first"

disks [

{

name = "os"

size = 32

},

{

name = "data"

size = 128

}

]

},

{

name = "second"

disks [

{

name = "os"

size = 64

},

{

name = "data"

size = 256

}

]

}

]

As you can see:

- A list of

virtual_machines.

- Each virtual_machine has a list of

disks.

Now let’s introduce the dynamic block:

resource "fake_virtual_machine" "default" {

for_each = var.virtual_machines

name = each.value.name

dynamic "disk" {

for_each = each.value.disks

content {

name = disk.value.name

size = disk.value.size

}

}

}

Wow, that’s a lot to explain:

- The

dynamic "disk" { starts a dynamic block. The name (“disk”) must reflect the parameter in the resource, not juts any name. Now a new object is available; disk.

- The

for_each = each.value.disks loops the dynamic block. The loop uses disks from an already looped value var.virtual_machines.

- The

content { block will be rendered by Terraform.

- The

name = disk.value.name uses the disk variable (created by the block iterator disk) to find the value from the disks map.

Hope that helps a bit when writing loops in Terraform!

Looping is quite an important mechanism in coding. (Thanks @Andreas for the word coding, a mix of scripting and programming.)

Looping allows you to write a piece of logic once, and reuse it as many times are required.

Looping is difficult to understand for new people new to coding. It’s sometimes difficult for me to. This article will probably help me a bit too!

A sequence

The simplest loop I know is repeating a piece of logic for a set of number or letters, like this:

First off, here is how to generate a sequence:

| bash |

ansible |

terraform |

seq 1 3 |

with_sequence: start=1 end=3 |

range(1, 3) |

{1..3} |

|

|

For all languages, this returns a list of numbers.

| bash |

ansible |

terraform |

1 2 3 |

item: 1, item: 2, item: 3 |

[ 1, 2, 3, ] |

So, a more complete example for the three languages:

Bash

for number in {1..3} ; do

echo "number: ${number}

done

The above script returns:

Ansible

- name: show something

debug:

msg: "number: "

with_sequence:

start=1

end=3

Note: See that = sign, I was expecting a : so made an issue.

The above script returns:

ok: [localhost] => (item=1) => {

"msg": "1"

}

ok: [localhost] => (item=2) => {

"msg": "2"

}

ok: [localhost] => (item=3) => {

"msg": "3"

}

locals {

numbers = range(1,4)

}

output "number" {

value = local.numbers

}

The above script returns:

number = tolist([

1,

2,

3,

])

Ansible testing components

To test Ansible, I use quite some components. This page lists the components uses, their versions, and where they are used.

| Component |

Used |

Latest |

Used where |

| ansible |

2.9 |

2.9.18 |

tox.ini |

| ansible |

2.10 |

2.10.7 |

tox.ini |

| molecule |

>=3,<4 |

|

docker-github-action-molecule |

| tox |

latest |

n.a. |

docker-github-action-molecule |

| ansible-lint |

latest |

|

docker-github-action-molecule |

| pre-commit |

2.9.3 |

v2.10.1 |

nstalled on development desktop. |

| molecule-action |

2.6.16 |

|

.github/workflows/molecule.yml |

| github-action-molecule |

3.0.6 |

|

.gitlab-ci.yml |

| ubuntu |

20.04 |

20.04 |

.github/workflows/galaxy.yml |

| ubuntu |

20.04 |

20.04 |

.github/workflows/molecule.yml |

| ubuntu |

20.04 |

20.04 |

.github/workflows/requirements2png.yml |

| ubuntu |

20.04 |

20.04 |

.github/workflows/todo.yml |

| galaxy-action |

1.1.0 |

|

.github/workflows/galaxy.yml |

| graphviz-action |

1.0.7 |

|

.github/workflows/requirements2png.yml |

| checkout |

v2 |

|

.github/workflows/requirements2png.yml |

| checkout |

v2 |

|

.github/workflows/molecule.yml |

| todo-to-issue |

v2.3 |

|

.github/workdlows/todo.yml |

| python |

3.9 |

3.9 |

.travis.yml |

| pre-commit-hooks |

v3.4.0 |

|

.pre-commit-config.yaml |

| yamllint |

v1.26.0 |

v1.26.0 |

.pre-commit-config.yaml |

| my pre-commit |

v1.1.2 |

|

.pre-commit-config.yaml |

| fedora |

33 |

33 |

docker-github-action-molecule |

Debugging GitLab builds

Now that Travis has become unusable, I’m moving stuff to GitLab. Some builds are breaking, this is how to reproduce the errors.

Start the dind container

export role=ansible-role-dns

cd Documents/github/robertdebock

docker run --rm --name gitlabci --volume $(pwd)/${role}:/${role}:z --privileged --tty --interactive docker:stable-dind

Login to the dind container

docker exec --tty --interactive gitlabci /bin/sh

Install software

The dind image is Alpine based and misses required software to run molecule or tox.

apk add --no-cache python3 python3-dev py3-pip gcc git curl build-base autoconf automake py3-cryptography linux-headers musl-dev libffi-dev openssl-dev openssh

Tox

GitLab CI tries to run tox (if tox.ini is found). To emulate GitLab CI, run:

python3 -m pip install tox --ignore-installed

And simply run tox to see the results.

Molecule

For more in-depth troubleshooting, try installing molecule:

python3 -m pip install ansible molecule[docker] docker ansible-lint

Now you can run molecule:

cd ${role}

molecule test --destroy=never

molecule login

Digitalocean Sizes and Images

I tend to forget the output of doctl compute size list -t ${DIGITALOCEAN_TOKEN} and doctl compute image list -t ${DIGITALOCEAN_TOKEN} --public, so here is a dump if the two commands.

Sizes.

| slug |

memory (mb) |

vcpu |

disk (gb) |

price/month($) |

price/hour($) |

| s-1vcpu-1gb |

1024 |

1 |

25 |

5.00 |

0.007440 |

| 512mb |

512 |

1 |

20 |

5.00 |

0.007440 |

| s-1vcpu-2gb |

2048 |

1 |

50 |

10.00 |

0.014880 |

| 1gb |

1024 |

1 |

30 |

10.00 |

0.014880 |

| s-3vcpu-1gb |

1024 |

3 |

60 |

15.00 |

0.022320 |

| s-2vcpu-2gb |

2048 |

2 |

60 |

15.00 |

0.022320 |

| s-1vcpu-3gb |

3072 |

1 |

60 |

15.00 |

0.022320 |

| s-2vcpu-4gb |

4096 |

2 |

80 |

20.00 |

0.029760 |

| 2gb |

2048 |

2 |

40 |

20.00 |

0.029760 |

| s-4vcpu-8gb |

8192 |

4 |

160 |

40.00 |

0.059520 |

| m-1vcpu-8gb |

8192 |

1 |

40 |

40.00 |

0.059520 |

| c-2 |

4096 |

2 |

25 |

40.00 |

0.059520 |

| 4gb |

4096 |

2 |

60 |

40.00 |

0.059520 |

| c2-2vcpu-4gb |

4096 |

2 |

50 |

45.00 |

0.066960 |

| g-2vcpu-8gb |

8192 |

2 |

25 |

60.00 |

0.089290 |

| gd-2vcpu-8gb |

8192 |

2 |

50 |

65.00 |

0.096730 |

| m-16gb |

16384 |

2 |

60 |

75.00 |

0.111610 |

| s-8vcpu-16gb |

16384 |

8 |

320 |

80.00 |

0.119050 |

| m-2vcpu-16gb |

16384 |

2 |

50 |

80.00 |

0.119050 |

| s-6vcpu-16gb |

16384 |

6 |

320 |

80.00 |

0.119050 |

| c-4 |

8192 |

4 |

50 |

80.00 |

0.119050 |

| 8gb |

8192 |

4 |

80 |

80.00 |

0.119050 |

| c2-4vpcu-8gb |

8192 |

4 |

100 |

90.00 |

0.133930 |

| m3-2vcpu-16gb |

16384 |

2 |

150 |

100.00 |

0.148810 |

| g-4vcpu-16gb |

16384 |

4 |

50 |

120.00 |

0.178570 |

| so-2vcpu-16gb |

16384 |

2 |

300 |

125.00 |

0.186010 |

| m6-2vcpu-16gb |

16384 |

2 |

300 |

125.00 |

0.186010 |

| gd-4vcpu-16gb |

16384 |

4 |

100 |

130.00 |

0.193450 |

| m-32gb |

32768 |

4 |

90 |

150.00 |

0.223210 |

| so1_5-2vcpu-16gb |

16384 |

2 |

450 |

155.00 |

0.230650 |

| m-4vcpu-32gb |

32768 |

4 |

100 |

160.00 |

0.238100 |

| s-8vcpu-32gb |

32768 |

8 |

640 |

160.00 |

0.238100 |

| c-8 |

16384 |

8 |

100 |

160.00 |

0.238100 |

| 16gb |

16384 |

8 |

160 |

160.00 |

0.238100 |

| c2-8vpcu-16gb |

16384 |

8 |

200 |

180.00 |

0.267860 |

| m3-4vcpu-32gb |

32768 |

4 |

300 |

195.00 |

0.290180 |

| g-8vcpu-32gb |

32768 |

8 |

100 |

240.00 |

0.357140 |

| s-12vcpu-48gb |

49152 |

12 |

960 |

240.00 |

0.357140 |

| so-4vcpu-32gb |

32768 |

4 |

600 |

250.00 |

0.372020 |

| m6-4vcpu-32gb |

32768 |

4 |

600 |

250.00 |

0.372020 |

| gd-8vcpu-32gb |

32768 |

8 |

200 |

260.00 |

0.386900 |

| m-64gb |

65536 |

8 |

200 |

300.00 |

0.446430 |

| so1_5-4vcpu-32gb |

32768 |

4 |

900 |

310.00 |

0.461310 |

| m-8vcpu-64gb |

65536 |

8 |

200 |

320.00 |

0.476190 |

| s-16vcpu-64gb |

65536 |

16 |

1280 |

320.00 |

0.476190 |

| c-16 |

32768 |

16 |

200 |

320.00 |

0.476190 |

| 32gb |

32768 |

12 |

320 |

320.00 |

0.476190 |

| c2-16vcpu-32gb |

32768 |

16 |

400 |

360.00 |

0.535710 |

| m3-8vcpu-64gb |

65536 |

8 |

600 |

390.00 |

0.580360 |

| g-16vcpu-64gb |

65536 |

16 |

200 |

480.00 |

0.714290 |

| s-20vcpu-96gb |

98304 |

20 |

1920 |

480.00 |

0.714290 |

| 48gb |

49152 |

16 |

480 |

480.00 |

0.714290 |

| so-8vcpu-64gb |

65536 |

8 |

1200 |

500.00 |

0.744050 |

| m6-8vcpu-64gb |

65536 |

8 |

1200 |

500.00 |

0.744050 |

| gd-16vcpu-64gb |

65536 |

16 |

400 |

520.00 |

0.773810 |

| m-128gb |

131072 |

16 |

340 |

600.00 |

0.892860 |

| so1_5-8vcpu-64gb |

65536 |

8 |

1800 |

620.00 |

0.922620 |

| m-16vcpu-128gb |

131072 |

16 |

400 |

640.00 |

0.952380 |

| s-24vcpu-128gb |

131072 |

24 |

2560 |

640.00 |

0.952380 |

| c-32 |

65536 |

32 |

400 |

640.00 |

0.952380 |

| 64gb |

65536 |

20 |

640 |

640.00 |

0.952380 |

| c2-32vpcu-64gb |

65536 |

32 |

800 |

720.00 |

1.071430 |

| m3-16vcpu-128gb |

131072 |

16 |

1200 |

785.00 |

1.168150 |

| m-24vcpu-192gb |

196608 |

24 |

600 |

960.00 |

1.428570 |

| g-32vcpu-128gb |

131072 |

32 |

400 |

960.00 |

1.428570 |

| s-32vcpu-192gb |

196608 |

32 |

3840 |

960.00 |

1.428570 |

| so-16vcpu-128gb |

131072 |

16 |

2400 |

1000.00 |

1.488100 |

| m6-16vcpu-128gb |

131072 |

16 |

2400 |

1000.00 |

1.488100 |

| gd-32vcpu-128gb |

131072 |

32 |

800 |

1040.00 |

1.547620 |

| m-224gb |

229376 |

32 |

500 |

1100.00 |

1.636900 |

| m3-24vcpu-192gb |

196608 |

24 |

1800 |

1175.00 |

1.748510 |

| g-40vcpu-160gb |

163840 |

40 |

500 |

1200.00 |

1.785710 |

| so1_5-16vcpu-128gb |

131072 |

16 |

3600 |

1240.00 |

1.845240 |

| m-32vcpu-256gb |

262144 |

32 |

800 |

1280.00 |

1.904760 |

| gd-40vcpu-160gb |

163840 |

40 |

1000 |

1300.00 |

1.934520 |

| so-24vcpu-192gb |

196608 |

24 |

3600 |

1500.00 |

2.232140 |

| m6-24vcpu-192gb |

196608 |

24 |

3600 |

1500.00 |

2.232140 |

| m3-32vcpu-256gb |

262144 |

32 |

2400 |

1565.00 |

2.328870 |

| so1_5-24vcpu-192gb |

196608 |

24 |

5400 |

1850.00 |

2.752980 |

| so-32vcpu-256gb |

262144 |

32 |

4800 |

2000.00 |

2.976190 |

| m6-32vcpu-256gb |

262144 |

32 |

4800 |

2000.00 |

2.976190 |

| so1_5-32vcpu-256gb |

262144 |

32 |

7200 |

2480.00 |

3.690480 |

images

| slug |

distribution |

disk (gb) |

name |

| centos-6-x32 |

CentOS |

20 |

6.9 x32 |

| centos-6-x64 |

CentOS |

20 |

6.9 x64 |

| ubuntu-16-04-x32 |

Ubuntu |

20 |

16.04.6 (LTS) x32 |

| freebsd-12-x64 |

FreeBSD |

20 |

12.1 ufs x64 |

| rancheros |

RancherOS |

20 |

v1.5.6 |

| centos-8-x64 |

CentOS |

15 |

8.2 x64 |

| debian-10-x64 |

Debian |

15 |

10 x64 |

| debian-9-x64 |

Debian |

15 |

9 x64 |

| freebsd-11-x64-zfs |

FreeBSD |

15 |

11.4 zfs x64 |

| freebsd-11-x64-ufs |

FreeBSD |

15 |

11.4 ufs x64 |

| centos-7-x64 |

CentOS |

20 |

7.6 x64 |

| fedora-32-x64 |

Fedora |

15 |

32 x64 |

| ubuntu-18-04-x64 |

Ubuntu |

15 |

18.04 (LTS) x64 |

| ubuntu-20-04-x64 |

Ubuntu |

15 |

20.04 (LTS) x64 |

| ubuntu-16-04-x64 |

Ubuntu |

15 |

16.04 (LTS) x64 |

| ubuntu-20-10-x64 |

Ubuntu |

15 |

20.10 x64 |

| fedora-33-x64 |

Fedora |

15 |

33 x64 |

| freebsd-12-x64-ufs |

FreeBSD |

20 |

12.2 ufs x64 |

| freebsd-12-x64-zfs |

FreeBSD |

15 |

12.2 zfs x64 |

| freebsd-12-1-x64-ufs |

FreeBSD |

20 |

12.1 ufs x64 |

| freebsd-12-1-x64-zfs |

FreeBSD |

20 |

12.1 zfs x64 |

| skaffolder-18-04 |

Ubuntu |

25 |

Skaffolder 3.0 on Ubuntu 18.04 |

| izenda-18-04 |

Ubuntu |

20 |

Izenda 3.3.1 on Ubuntu 18.04 |

| quickcorp-qcobjects-18-04 |

Ubuntu |

25 |

QCObjects 2.1.157 on Ubuntu 18.04 |

| fathom-18-04 |

Ubuntu |

25 |

Fathom on 18.04 |

| optimajet-workflowserver-18-04 |

Ubuntu |

25 |

WorkflowServer 2.5 on Ubuntu 18.04 |

| nimbella-18-04 |

Ubuntu |

25 |

Nimbella Lite on Ubuntu 18.04 |

| snapt-snaptaria-18-04 |

Ubuntu |

25 |

Snapt Aria 2.0.0 on Ubuntu 18.04 |

| snapt-snaptnova-18-04 |

Ubuntu |

25 |

Snapt Nova ADC (Load Balancer, WAF) 1.0.0 on Ubuntu 18.04 |

| weconexpbx-7-6 |

CentOS |

25 |

WeconexPBX 2.4-1 on CentOS 7.6 |

| bitwarden-18-04 |

Ubuntu |

50 |

Bitwarden 1.32.0 on Ubuntu 18.04 |

| buddy-18-04 |

Ubuntu |

160 |

Buddy on Ubuntu 18.04 |

| sharklabs-minecraftjavaedi-18-04 |

Ubuntu |

25 |

Minecraft: Java Edition Server 1.0 on Ubuntu 18.04 |

| selenoid-18-04 |

Ubuntu |

25 |

Selenoid 1.10.0 on Ubuntu 18.04 |

| litespeedtechnol-openlitespeednod-18-04 |

Ubuntu |

25 |

OpenLiteSpeed NodeJS 12.16.3 on Ubuntu 20.04 |

| simontelephonics-freepbx-7-6 |

CentOS |

25 |

FreePBX® 15 on CentOS 7.6 |

| buddy-repman-18-04 |

Ubuntu |

25 |

Repman 0.4.1 on Ubuntu 18.04 (LTS) |

| strapi-18-04 |

Ubuntu |

50 |

Strapi 3.1.0 on Ubuntu 18.04 |

| wftutorials-purdm-18-04 |

Ubuntu |

25 |

Purdm 0.3a on Ubuntu 18.04 |

| caprover-18-04 |

Ubuntu |

25 |

CapRover 1.8.0 on Ubuntu 18.04 |

| searchblox-searchbloxenterp-7-6 |

CentOS |

320 |

SearchBlox Enterprise Search 9.2.1 on CentOS 7.6 |

| gitea-18-04 |

Ubuntu |

25 |

Gitea 1.12.4 on Ubuntu 20.04 |

| kandralabs-zulip-18-04 |

Ubuntu |

50 |

Zulip 3.2 on Ubuntu 18.04 |

| vodianetworks-vodiaphonesystem-10 |

Debian |

25 |

Vodia Multi-tenant Cloud PBX 66 on Debian 10 x64 |

| flipstarter-18-04 |

Ubuntu |

25 |

Flipstarter 1.1.1 on Ubuntu 18.04 |

| gluu-gluuserverce-18-04-3 |

Ubuntu |

160 |

Gluu Server CE 4.2.1 on Ubuntu 20.04 (LTS) |

| netfoundry-7-6 |

CentOS |

25 |

NetFoundry Zero Trust Networking 7.3.0 on CentOS 7.8 |

| aplitel-vitalpbx-7 |

CentOS |

25 |

VitalPBX 3.0.4-1 on Centos 7.8 |

| cloudron-18-04 |

Ubuntu |

25 |

Cloudron 5.6.3 on Ubuntu 18.04 |

| sharklabs-pacvim-18-04 |

Ubuntu |

25 |

PacVim on Ubuntu 18.04 |

| eltrino-magento2opensour-18-04 |

Ubuntu |

80 |

Magento 2 Open Source 1.3.1 on Ubuntu 20.04 (LTS) |

| solidinvoice-18-04 |

Ubuntu |

25 |

SolidInvoice 2.0.3 on Ubuntu 18.04 |

| opencart-18-04 |

Ubuntu |

25 |

OpenCart 3.0.3 on Ubuntu 18.04 |

| unlight-openunlight-18-04 |

Ubuntu |

30 |

Open Unlight 1.0.0.pre1 on Ubuntu 18.04 |

| supabase-supabaserealtime-18-04 |

Ubuntu |

20 |

Supabase Realtime 0.7.5 on Ubuntu 18.04 |

| runcloud-18-04 |

Ubuntu |

25 |

RunCloud-18.04 on Ubuntu 18.04 |

| runcloud-runcloud2004-20-04 |

Ubuntu |

25 |

RunCloud-20.04 on Ubuntu 20.04 |

| supabase-supabasepostgres-18-04 |

Ubuntu |

25 |

Supabase Postgres 0.13.0 on Ubuntu 18.04 |

| nmtec-erxes-18-04 |

Ubuntu |

80 |

Erxes 0.17.6 on Ubuntu 18.04 |

| fastnetmon-18-04 |

Ubuntu |

25 |

FastNetMon 2.0 on Ubuntu 18.04 |

| cyberscore-18-04 |

Ubuntu |

25 |

CyberScore 5.0.1 on Ubuntu 18.04.3 |

| shiftedit-serverwand-18-04 |

Ubuntu |

25 |

ServerWand 1.0 on Ubuntu 18.04 |

| ultrahorizon-uhvpn-18-04 |

Ubuntu |

25 |

UH VPN 1.2.0 on Ubuntu 20.04 |

| meilisas-meilisearch-10 |

Debian |

25 |

MeiliSearch 0.16.0 on Debian 10 (buster) |

| helpy-18-04 |

Ubuntu |

25 |

Helpy 2.4 on 18.04 |

| deadcanaries-onionroutedcloud-18-04 |

Ubuntu |

25 |

Onion Routed Cloud 14 on 18.04 |

| dokku-18-04 |

Ubuntu |

20 |

Dokku 0.17.9 on 18.04 |

| mysql-18-04 |

Ubuntu |

20 |

MySQL on 18.04 |

| phpmyadmin-18-04 |

Ubuntu |

20 |

PhpMyAdmin on 18.04 |

| jenkins-18-04 |

Ubuntu |

25 |

CloudBees Jenkins on 18.04 |

| influxdb-18-04 |

Ubuntu |

25 |

Influx TICK on 18.04 |

| invoiceninja-18-04 |

Ubuntu |

25 |

Invoice Ninja 1.0.0 on Ubuntu 18.0.4 |

| zeromon-zabbix-18-04 |

Ubuntu |

25 |

Zeromon Zabbix 4 on Ubuntu 18.04 |

| lemp-18-04 |

Ubuntu |

20 |

LEMP on 18.04 |

| nakama-18-04 |

Ubuntu |

25 |

Nakama 2.7.0 on Ubuntu 18.04 |

| redash-18-04 |

Ubuntu |

30 |

Redash 8.0.0 on Ubuntu 18.04 |

| mattermost-18-04 |

Ubuntu |

20 |

Mattermost 5.16.3 on Ubuntu 18.04 |

| rethinkdb-rethinkdbfantasi-18-04 |

Ubuntu |

25 |

RethinkDB (Fantasia) 2.3.7 on Ubuntu 18.04 |

| openlitespeed-wp-18-04 |

Ubuntu |

25 |

OpenLiteSpeed WordPress 5.3 on Ubuntu 18.04 |

| sharklabs-ninjam-10-0 |

Debian |

25 |

Ninjam on Debian 10.0 x64 |

| workarea-18-04 |

Ubuntu |

25 |

Workarea 3.5.x on Ubuntu 18.04 |

| gitlab-meltano-18-04 |

Ubuntu |

25 |

Meltano 1.15.0 on Ubuntu 18.04 |

| rocketchat-18-04 |

Ubuntu |

25 |

Rocket.Chat 2.4.9 on Ubuntu 18.04 |

| reblaze-reblazewaf-18-04 |

Ubuntu |

25 |

Reblaze WAF 2.12.10 on Ubuntu 18.04 |

| sharklabs-nodejsquickstart-18-04 |

Ubuntu |

25 |

Node.js Quickstart 1.0 on Ubuntu 18.04 |

| rails-18-04 |

Ubuntu |

20 |